Context Engineering: Beyond Prompts

Why the most important shift in AI development isn't about better prompts, but about the systems we build around them.

A consensus is forming among the builders of AI, and it started with a simple, powerful idea. Shopify CEO Tobi Lütke put it best when he said:

“I really like the term ‘context engineering’ over prompt engineering. It describes the core skill better: the art of providing all the context for the task to be plausibly solvable by the LLM.”

Andrej Karpathy, one of the most respected minds in AI, quickly agreed, calling it the “delicate art and science of filling the context window with just the right information for the next step.”

This isn't just a semantic debate for developers. It’s a crucial evolution in how we think about, design, and build applications with Large Language Models. Understanding this shift is the key to moving from creating clever demos to engineering reliable products.

Defining the Terms: What's the Real Difference?

To see why this distinction is so important, we first have to define the terms clearly.

Prompt Engineering is the art of crafting a specific instruction or query to elicit a desired response from an LLM in a single turn. It focuses on the clarity, specificity, and format of the direct command given to the model. Think of it as asking the perfect question.

Context Engineering is the broader discipline of designing and building systems that provide the LLM with the complete universe of information it needs to perform a task. The prompt is just one part of this universe. Context engineering is about setting the entire stage so the model can act intelligently upon it.

If prompt engineering is writing the script for a single line, context engineering is the work of the director, the set designer, and the casting agent combined. It's about ensuring the actor has everything they need (the script, the props, the backstory) before the camera starts rolling.

Why the Distinction Matters

Separating these two concepts is vital because it shifts our mindset from wordsmithing to systems architecture. This has three immediate and important implications for anyone building with AI.

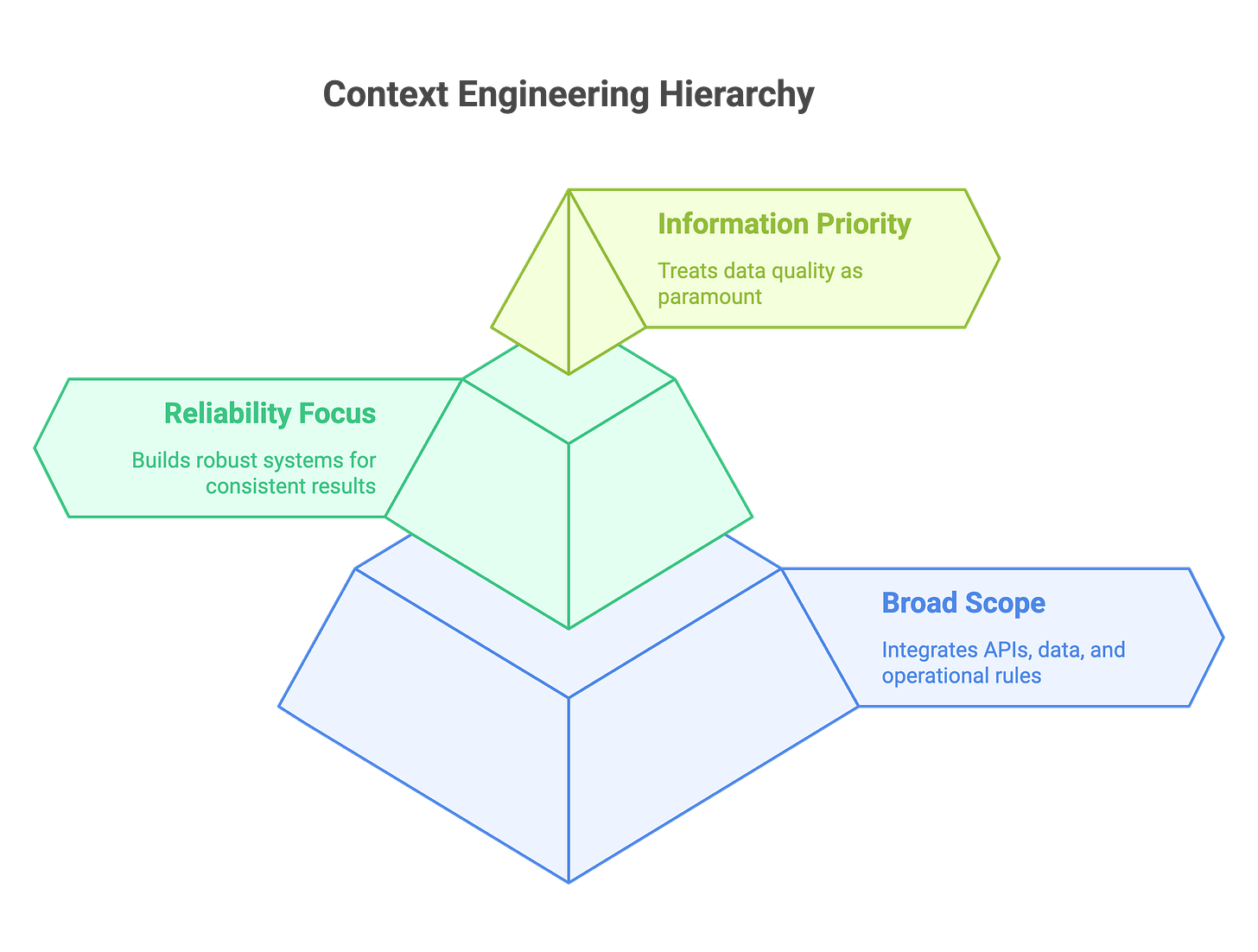

It Broadens the Scope of Work. "Prompt engineering" sounds like a task for a writer. "Context engineering" accurately describes the work of an engineer. It acknowledges that building with LLMs involves not just writing text, but integrating APIs, managing data retrieval pipelines, handling conversational state, and defining complex operational rules.

It Focuses on Reliability over Tricks. A clever prompt can produce a magical result once, but it's often brittle. When it fails, the only remedy is to tweak the wording and try again. Context engineering, by contrast, focuses on building a robust system. When an application fails, an engineer can debug the entire context pipeline. Was the data returned by the retrieval-augmented generation (RAG) step incorrect? Did the conversational memory get dropped? Was the tool definition unclear? This system-level approach is the only path to creating reliable, production-grade AI.
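To make the debugging point concrete, here is a minimal Python sketch, assuming a pipeline that keeps each context component in its own field rather than one concatenated string. The `ContextBundle` class, the `diagnose` function, and the specific checks it runs are hypothetical illustrations, not a standard library or framework API; a real system would add checks tailored to its own data.

```python
from dataclasses import dataclass, field


@dataclass
class ContextBundle:
    """One context payload, with each component kept separate so it can be inspected."""
    system: str
    user_prompt: str
    retrieved_docs: list[str] = field(default_factory=list)
    history: list[str] = field(default_factory=list)
    tools: dict[str, str] = field(default_factory=dict)  # tool name -> description


def diagnose(bundle: ContextBundle, expects_retrieval: bool, expects_history: bool) -> list[str]:
    """Return warnings for components that look missing or empty."""
    problems = []
    if not bundle.system.strip():
        problems.append("system instructions are empty")
    if expects_retrieval and not bundle.retrieved_docs:
        problems.append("retrieval returned no documents")
    if expects_history and not bundle.history:
        problems.append("conversation history was dropped")
    for name, description in bundle.tools.items():
        if not description.strip():
            problems.append(f"tool '{name}' has no description")
    return problems


# A follow-up question arrives, but retrieval came back empty and the history was lost.
bundle = ContextBundle(system="You are a support assistant.", user_prompt="And the second order?")
print(diagnose(bundle, expects_retrieval=True, expects_history=True))
# ['retrieval returned no documents', 'conversation history was dropped']
```

Because each component stays separate until the last moment, a bad answer can be traced to the stage that produced the bad input instead of being blamed on the wording of the prompt.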

It Separates the Instruction from the Information. The core job of an LLM is to reason over information given an instruction. Prompt engineering focuses solely on the instruction. Context engineering professionalizes the craft by treating the information as a first-class citizen. It holds that the quality of the data we provide is just as important as, if not more important than, the quality of the question we ask.
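One simple way to keep instruction and information apart is to render them into separate, labeled sections, so the information can be swapped out or audited without touching the instruction. The sketch below assumes plain-text `### Instruction` / `### Information` delimiters and a hypothetical `render_prompt` helper; the delimiter style is an arbitrary choice for illustration, not a requirement of any particular model.

```python
def render_prompt(instruction: str, documents: list[str]) -> str:
    """Place the instruction and the supporting information in clearly separated sections."""
    # Number each document so the model (and a human debugging it) can refer to a source.
    info_block = "\n".join(f"[{i}] {doc}" for i, doc in enumerate(documents, start=1))
    return (
        "### Instruction\n"
        f"{instruction}\n\n"
        "### Information\n"
        f"{info_block if info_block else '(no documents retrieved)'}"
    )


print(render_prompt(
    "Answer using only the information below.",
    ["Refunds take 5 business days.", "Orders ship within 24 hours."],
))
# ### Instruction
# Answer using only the information below.
#
# ### Information
# [1] Refunds take 5 business days.
# [2] Orders ship within 24 hours.
```

The instruction string never changes here; improving answers becomes a matter of improving the documents passed in, which is exactly the shift in emphasis described above.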

The Components of Engineered Context

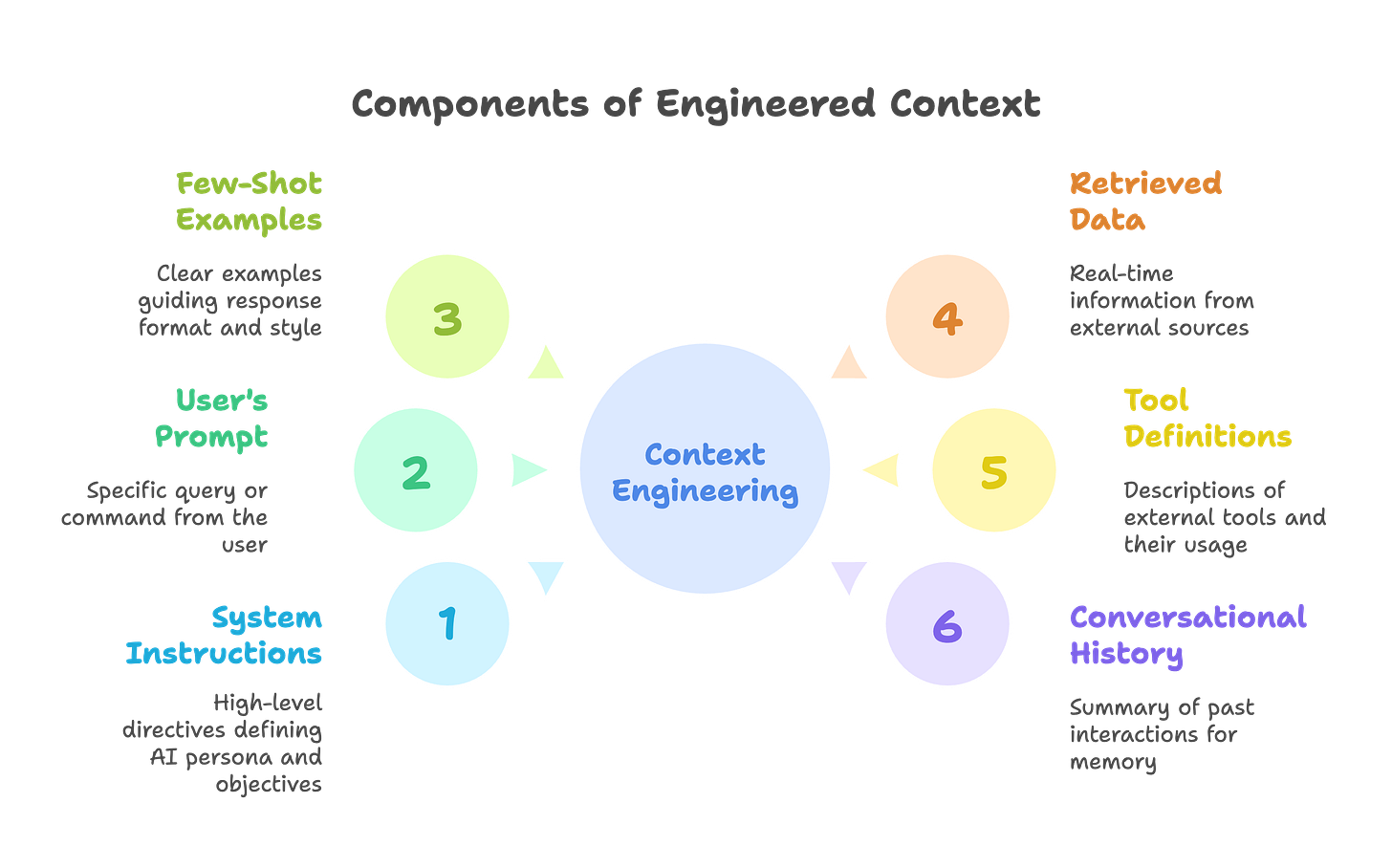

So, what does this "context" actually contain? It is the entire payload of information assembled by your system before the final prompt is sent to the model. This payload typically includes:

System Instructions: High-level directives that define the AI’s persona, its core objective, its constraints, and its safety guidelines.

The User's Prompt: The specific, immediate query or command from the user.

Few-Shot Examples: A handful of clear examples of desired inputs and their corresponding outputs to guide the model's response format and style.

Retrieved Data (RAG): Information pulled in real-time from external knowledge bases, databases, or documents to ground the model in facts it wasn't trained on.

Tool Definitions: A clear description of the external tools or functions the model can use, including their names, parameters, and when to use them.

Conversational History: A summary or transcript of the current interaction to provide memory and allow for follow-up questions.
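As a rough illustration of how these six pieces might be combined, the sketch below assembles them into the role/content message list that many chat-style LLM APIs accept. The `build_messages` function, the `get_order_status` tool, and the choice to append tool definitions and retrieved data to the system message are assumptions for illustration only; several providers take tool definitions through a dedicated API parameter instead, so check the documentation of the model you use. No API is actually called here.

```python
import json

# Hypothetical tool definition, written in the JSON Schema style many function-calling APIs use.
order_tool = {
    "name": "get_order_status",
    "description": "Look up the shipping status of an order by its ID.",
    "parameters": {
        "type": "object",
        "properties": {"order_id": {"type": "string"}},
        "required": ["order_id"],
    },
}


def build_messages(system, few_shot, retrieved, tools, history, user_prompt):
    """Assemble the six context components into a chat-style list of role/content messages."""
    # System instructions, with tool definitions and retrieved data appended (an assumed layout).
    system_parts = [system]
    if tools:
        system_parts.append("Available tools:\n" + json.dumps(tools, indent=2))
    if retrieved:
        system_parts.append("Reference material:\n" + "\n".join(f"- {doc}" for doc in retrieved))
    messages = [{"role": "system", "content": "\n\n".join(system_parts)}]

    # Few-shot examples, rendered as earlier user/assistant exchanges.
    for example_input, example_output in few_shot:
        messages.append({"role": "user", "content": example_input})
        messages.append({"role": "assistant", "content": example_output})

    # Conversation history, already stored as role/content dicts.
    messages.extend(history)

    # The user's current prompt always goes last.
    messages.append({"role": "user", "content": user_prompt})
    return messages


msgs = build_messages(
    system="You are a concise support assistant for an online store.",
    few_shot=[("Where is order 42?", "Order 42 shipped yesterday.")],
    retrieved=["Refunds are processed within 5 business days."],
    tools=[order_tool],
    history=[
        {"role": "user", "content": "Hi"},
        {"role": "assistant", "content": "Hello! How can I help?"},
    ],
    user_prompt="How long do refunds take?",
)
print(len(msgs))  # 6: system, two few-shot messages, two history turns, current prompt
```

Only the final line of this payload is the "prompt" in the narrow sense; everything above it is context that the system had to select and assemble.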

In short, prompt engineering is what we do inside the context window. Context engineering is the work of deciding what fills that window in the first place. One is a tactic; the other is the strategy. For builders looking to create the next generation of AI applications, mastering that strategy is everything.
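Deciding what fills the window often comes down to a budget: the window has a fixed size, so something must be left out. The minimal sketch below assumes a hypothetical `select_context` helper and uses a whitespace word count as a crude stand-in for a real tokenizer. It always keeps the system instructions and the user's prompt, then adds the most recent conversation turns until the budget runs out.

```python
def count_tokens(text: str) -> int:
    # Crude stand-in: a real system should use the target model's own tokenizer.
    return len(text.split())


def select_context(system: str, history: list[str], user_prompt: str, budget: int) -> list[str]:
    """Keep system + prompt, then fill the remaining budget with the newest history turns."""
    used = count_tokens(system) + count_tokens(user_prompt)
    if used > budget:
        raise ValueError("system instructions and prompt alone exceed the budget")
    kept = []
    # Walk history from newest to oldest so recent turns win when space runs out.
    for turn in reversed(history):
        cost = count_tokens(turn)
        if used + cost > budget:
            break
        kept.append(turn)
        used += cost
    kept.reverse()  # restore chronological order for the model
    return [system, *kept, user_prompt]


history = [
    "I ordered a red bicycle last week.",
    "Thanks, it shipped.",
    "Can I change the delivery address?",
]
print(select_context("You are a helpful assistant.", history, "What did I order?", budget=20))
# ['You are a helpful assistant.', 'Thanks, it shipped.', 'Can I change the delivery address?', 'What did I order?']
```

Note that the turn the user's question actually depends on (the bicycle order) is the one that gets dropped. A pure recency rule is a simple policy, not always a good one, which is why systems often replace older turns with a summary instead.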

Excellent. Thanks.

As always, great write-up, Vikash.

I think most products are already being built with these six components:

- One, two, and three have always existed in prompts (good prompts).

- Six, conversational history, is already there. Products are being built to generate better context from it.

- Four and five are how products are internally wired to respond to user prompts.

Do you think we will eventually just call it engineering?