How ML Ranking Algorithms Work

From Basics to Multi-Tower Models (Two-Tower and Three-Tower)

Ranking systems are everywhere, often without us even realizing it. Whenever there’s too much information or too many options, algorithms help sort, prioritize, and display the most useful results. Below are some real-world examples of ranking systems beyond search engines:

E-commerce: Ranking products to show the most relevant or popular ones first.

Job Portals: Matching job seekers with job listings and ranking applicants for recruiters.

Healthcare: Recommending the most appropriate treatments or clinical trials for patients.

Travel Apps: Sorting hotels, flights, or rental options by price, location, or user preferences.

Music Streaming: Creating personalized playlists based on listening habits.

Food Delivery: Ranking restaurants based on delivery time, popularity, or user preferences.

Education Platforms: Recommending courses or study materials relevant to each student’s interests.

Whenever a system must prioritize one thing over another, a ranking algorithm is involved. This article will help you understand how these algorithms work and how multi-tower models improve ranking by matching queries, items, and user preferences.

TL;DR / Outline

This guide explains ranking algorithms, starting with a simple grocery store analogy to build intuition, and dives into multi-tower architectures (Two-Tower and Three-Tower models). It explores various real-world use cases of ranking systems, from e-commerce to healthcare, and discusses common challenges and solutions. A code appendix using PyTorch helps you implement your own Two-Tower model.

Grocery Store Analogy: A Simple Example of Ranking

Imagine you walk into a grocery store and ask for “snacks.” Instead of giving you all 1,000 snacks in the store, the manager tries to rank and recommend the best options based on a few key questions:

What’s popular right now? (trending snacks like chips and cookies).

Do you have specific preferences? (if the manager knows you prefer gluten-free options, they’ll recommend those).

Are there any new snacks you might enjoy? (recent arrivals or items you’ve never tried).

This is exactly what ranking algorithms do: they prioritize results based on multiple signals, such as the query itself, popularity, personal preferences, and variety. But how do these systems process all this information efficiently? This is where Two-Tower and Three-Tower models come in.
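To make the "multiple signals" idea concrete, here is a minimal sketch of the grocery manager's reasoning as a hand-weighted score. The signal names, the 0-1 values, and the weights are all hypothetical; a real system would learn how much each signal matters rather than hard-coding it.

```python
from dataclasses import dataclass


@dataclass
class Snack:
    name: str
    query_match: float  # how well the item matches "snacks" (0-1)
    popularity: float   # normalized trend/sales signal (0-1)
    preference: float   # fit with this shopper's known preferences (0-1)
    novelty: float      # 1.0 if the shopper has never bought it, else 0.0


# Hypothetical weights: a learned ranker would fit these from data.
WEIGHTS = {"query_match": 0.4, "popularity": 0.2, "preference": 0.3, "novelty": 0.1}


def score(snack: Snack) -> float:
    """Weighted sum of the individual ranking signals."""
    return sum(weight * getattr(snack, signal) for signal, weight in WEIGHTS.items())


def rank(snacks: list[Snack]) -> list[Snack]:
    """Highest combined score first."""
    return sorted(snacks, key=score, reverse=True)


snacks = [
    Snack("potato chips", query_match=0.9, popularity=0.9, preference=0.1, novelty=0.0),
    Snack("gluten-free crackers", query_match=0.8, popularity=0.4, preference=0.9, novelty=0.0),
    Snack("new seaweed crisps", query_match=0.7, popularity=0.2, preference=0.6, novelty=1.0),
]

for s in rank(snacks):
    print(f"{s.name}: {score(s):.2f}")
```

For a shopper who prefers gluten-free food, the crackers (0.67) outrank the more popular chips (0.57), and the novelty bonus lifts the unfamiliar seaweed crisps (0.60) into second place. Changing the weights shows how sensitive the ordering is to the balance between signals.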

Two-Tower Model: Query-Item Matching Architecture

The Two-Tower model is a common machine learning architecture used to match queries with items. Think of it like two employees at the grocery store—one who understands what you’re asking for, and another who knows everything about the available products. Together, they coordinate to find the best matches.

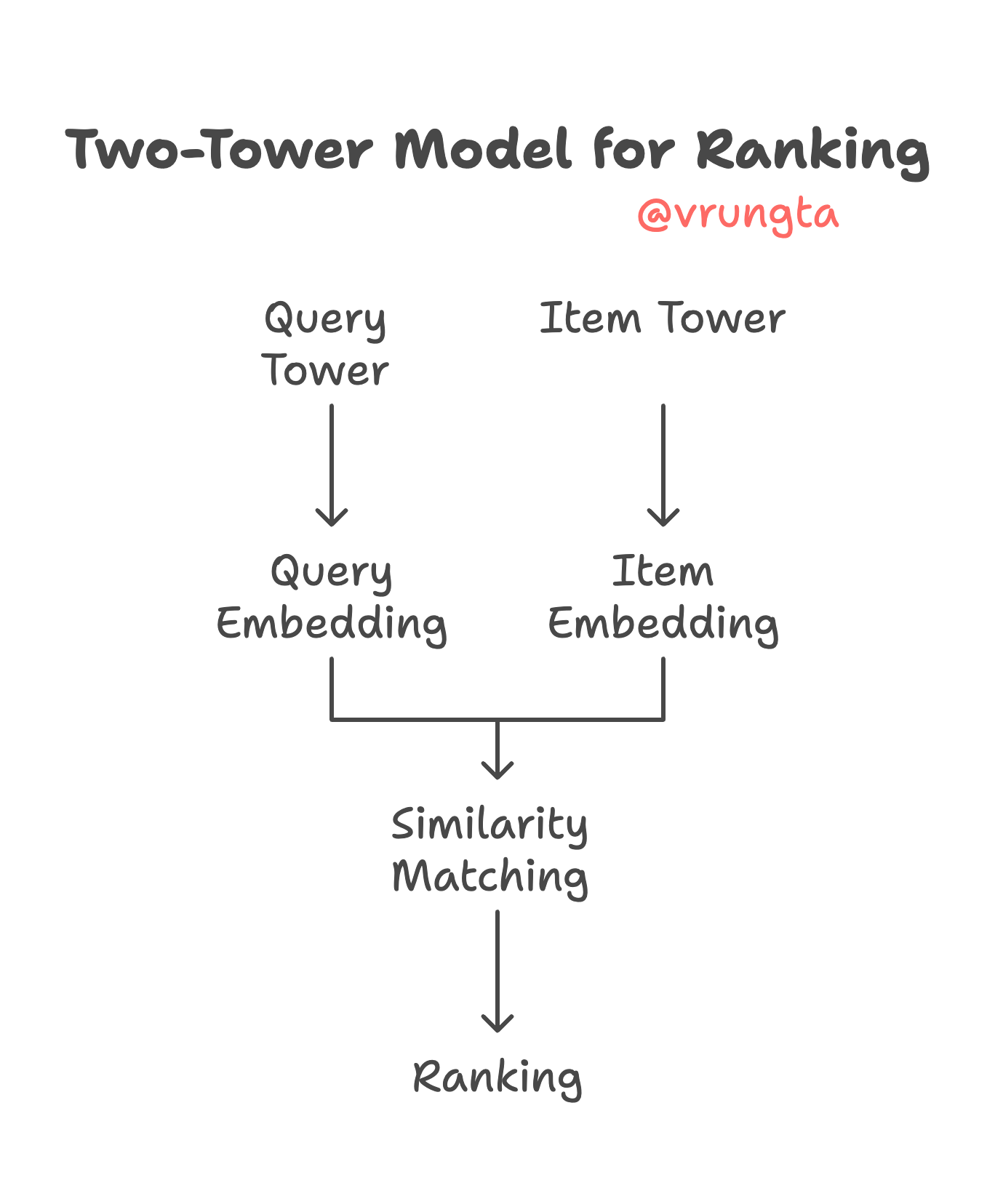

How the Two-Tower Model Works

The Two-Tower model processes queries and products separately using two neural networks (or “towers”). Each tower generates an embedding (a vector) to represent the query or item in a shared vector space. Items whose embeddings lie closer to the query embedding in this space are considered more relevant and are ranked higher.

Query Tower:

Converts the search query (e.g., “gluten-free snacks”) into a query embedding.

This embedding captures the semantic meaning of the query.

Item Tower:

Converts product data (e.g., “organic popcorn”) into an item embedding.

Because the item tower never sees the query, these product embeddings can be pre-computed and stored for efficient comparison.

Similarity Matching:

The query and item embeddings are compared using a similarity metric (such as the dot product or cosine similarity).

The system ranks items based on how similar they are to the query.
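The three steps above can be sketched without any framework. This is a minimal illustration under stated assumptions: the tiny vocabulary, the 4-dimensional embeddings, the single-layer `Tower` class, and the seeds are all hypothetical, and the weights are random (untrained). In practice each tower would be a deeper network (for example a PyTorch `nn.Module`) trained so that matching query-item pairs land close together.

```python
import math
import random

# Hypothetical toy vocabulary and embedding size.
VOCAB = ["gluten", "free", "snacks", "organic", "popcorn", "chocolate", "cookies", "crackers"]
EMBED_DIM = 4


def featurize(text: str) -> list[float]:
    """Multi-hot bag-of-words vector over the toy vocabulary."""
    tokens = text.lower().replace("-", " ").split()
    return [1.0 if word in tokens else 0.0 for word in VOCAB]


class Tower:
    """One tower: a single linear layer followed by tanh. Weights are random, NOT trained."""

    def __init__(self, in_dim: int, out_dim: int, seed: int):
        rng = random.Random(seed)
        self.weights = [[rng.gauss(0.0, 0.5) for _ in range(in_dim)] for _ in range(out_dim)]

    def __call__(self, features: list[float]) -> list[float]:
        return [math.tanh(sum(w * x for w, x in zip(row, features))) for row in self.weights]


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity in [-1, 1]; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


query_tower = Tower(len(VOCAB), EMBED_DIM, seed=1)
item_tower = Tower(len(VOCAB), EMBED_DIM, seed=2)

items = ["organic popcorn", "gluten-free crackers", "chocolate cookies"]
# Item embeddings do not depend on the query, so they are computed once up front.
item_embeddings = {name: item_tower(featurize(name)) for name in items}


def rank(query: str) -> list[tuple[str, float]]:
    """Embed the query, score every stored item, and sort by similarity."""
    q = query_tower(featurize(query))
    scored = [(name, cosine(q, emb)) for name, emb in item_embeddings.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


for name, s in rank("gluten-free snacks"):
    print(f"{name}: {s:+.3f}")
```

Because the weights are random, the printed scores are arbitrary. Training (for example with a contrastive loss over known matching query-item pairs) is what pulls relevant items toward the query in the shared space; the scoring mechanics stay the same.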

Real-World Use Cases for the Two-Tower Model

E-commerce: Matching search queries to products.

Travel Platforms: Matching flight or hotel searches to relevant results.

Job Portals: Matching resumes to job listings.

The Two-Tower model is powerful because product embeddings can be pre-computed, making the system fast and scalable. However, it doesn’t account for user-specific preferences—and this is where the Three-Tower model comes in.
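The speed advantage comes from splitting the work: the item tower runs offline once per item, and at query time only the query tower runs, followed by one dot product per stored item. The sketch below shows that split with a hypothetical `ItemIndex` class; the 3-dimensional embeddings are made-up stand-ins for item-tower outputs. Vectors are L2-normalized on insertion so that the dot product equals cosine similarity.

```python
import heapq
import math


def normalize(v: list[float]) -> list[float]:
    """Scale a vector to unit length so dot product == cosine similarity."""
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]


class ItemIndex:
    """Hypothetical store of pre-computed, normalized item embeddings."""

    def __init__(self) -> None:
        self.ids: list[str] = []
        self.vectors: list[list[float]] = []

    def add(self, item_id: str, embedding: list[float]) -> None:
        # Offline step: run once per item, after the item tower produces its embedding.
        self.ids.append(item_id)
        self.vectors.append(normalize(embedding))

    def top_k(self, query_embedding: list[float], k: int) -> list[tuple[float, str]]:
        # Online step: only the query embedding is new; each item costs one dot product.
        q = normalize(query_embedding)
        scores = (
            (sum(a * b for a, b in zip(q, vec)), item_id)
            for item_id, vec in zip(self.ids, self.vectors)
        )
        return heapq.nlargest(k, scores)


index = ItemIndex()
index.add("organic popcorn", [0.9, 0.1, 0.0])
index.add("gluten-free crackers", [0.7, 0.7, 0.1])
index.add("chocolate cookies", [0.0, 0.2, 0.9])

for score, item in index.top_k([0.8, 0.6, 0.0], k=2):
    print(f"{item}: {score:.3f}")
```

This linear scan touches every item. With millions of items, production systems typically swap it for an approximate nearest-neighbor index (libraries such as FAISS exist for this), which works precisely because the item vectors were computed ahead of time.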

Three-Tower Model: Adding Personalization

The Three-Tower model builds on the Two-Tower architecture by adding a User Tower, which processes user behavior and preferences. This allows the system to make personalized recommendations.

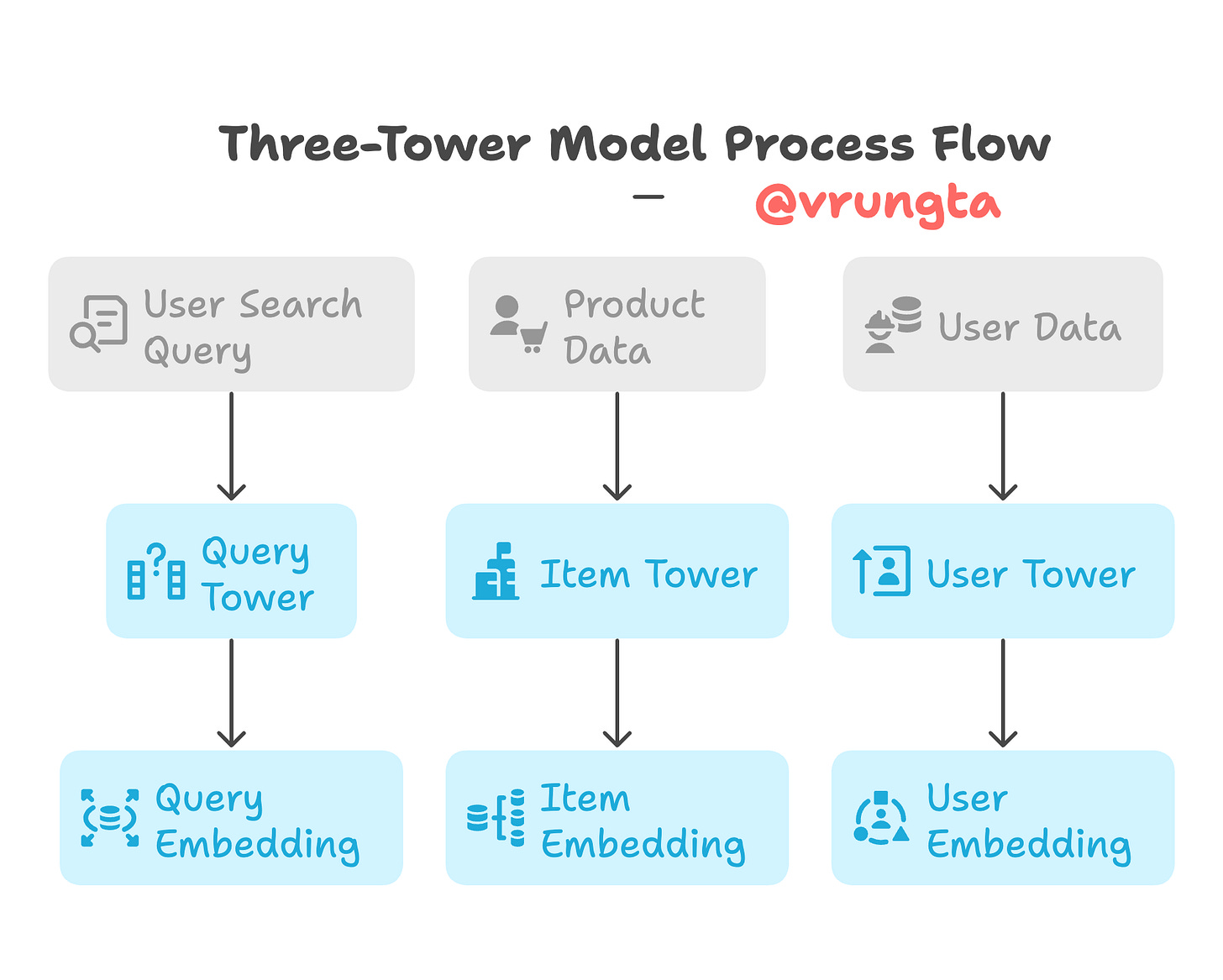

How the Three-Tower Model Works

Query Tower:

Converts the user’s search query into an embedding.

Item Tower:

Converts product data into an item embedding.

User Tower:

Generates a user embedding based on the user’s purchase history, preferences, or behavior.
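One simple way to picture the User Tower's output is as a summary of the items a user has interacted with. The sketch below uses a recency-weighted average of item embeddings as an untrained stand-in; this is an assumption for illustration, not how every Three-Tower system builds its user vector. Real user towers are usually learned networks over many features (history, context, profile data). The `decay` parameter and the 2-dimensional vectors are hypothetical.

```python
def user_embedding(history: list[list[float]], decay: float = 0.8) -> list[float]:
    """Recency-weighted mean of embeddings of items the user bought or clicked.

    history is ordered oldest -> newest; decay < 1 gives older items less weight,
    and decay == 1 reduces to a plain average.
    """
    if not history:
        # No history means nothing to summarize: this is the cold-start case.
        raise ValueError("empty history: fall back to a default or content-based embedding")
    n, dim = len(history), len(history[0])
    weights = [decay ** (n - 1 - i) for i in range(n)]  # newest item gets weight 1.0
    total = sum(weights)
    return [sum(w * vec[d] for w, vec in zip(weights, history)) / total for d in range(dim)]


# Hypothetical 2-D item embeddings: axis 0 ~ "vegan", axis 1 ~ "chocolate".
purchases = [[0.9, 0.1], [0.8, 0.3], [0.95, 0.0]]
print(user_embedding(purchases))
```

A shopper whose purchases sit mostly along the "vegan" axis ends up with a user vector that leans the same way, which is what lets later scoring favor vegan items for that person.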

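The system combines the three embeddings (query, product, and user) to calculate the final relevance score for each item. One option is to merge the query and user embeddings into a single context vector and compare it with each item embedding; another is to feed all three embeddings into a small scoring network.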
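Here is a minimal sketch of one way to do the combination: blend the query and user embeddings into a single context vector, then score items with a dot product. The blend weight `alpha`, the 2-dimensional vectors, and the axis meanings are hypothetical, and a linear blend is only one of several fusion choices.

```python
def dot(a: list[float], b: list[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def three_tower_score(query: list[float], user: list[float], item: list[float],
                      alpha: float = 0.6) -> float:
    """Blend query and user embeddings into one context vector, then score the item.

    alpha=1.0 ignores the user (plain Two-Tower scoring); alpha=0.0 ignores the query.
    """
    context = [alpha * q + (1 - alpha) * u for q, u in zip(query, user)]
    return dot(context, item)


# Hypothetical 2-D embeddings: axis 0 ~ "vegan", axis 1 ~ "mainstream/popular".
query = [0.5, 0.5]       # "snacks": neutral between the two axes
vegan_user = [1.0, 0.0]  # stand-in for the user tower output of someone who always buys vegan
items = {"vegan crisps": [0.9, 0.2], "potato chips": [0.1, 1.2]}


def rank(user: list[float], alpha: float) -> list[str]:
    return sorted(items, key=lambda name: three_tower_score(query, user, items[name], alpha),
                  reverse=True)


print(rank(vegan_user, alpha=1.0))  # query only
print(rank(vegan_user, alpha=0.6))  # query + user
```

With the user signal switched off, the mainstream chips score higher (0.65 vs 0.55); with it on, the vegan crisps move to the top (0.69 vs 0.43). A useful property of this late-fusion design is that item embeddings still never depend on the query or user, so they can be pre-computed exactly as in the Two-Tower case. Concatenating the three vectors into a neural network is more expressive but gives up that property.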

Grocery Store Analogy for the Three-Tower Model

Now, imagine the grocery store manager knows that you always buy vegan snacks. If you ask for “snacks,” the manager will prioritize vegan-friendly options even if other snacks are more popular. The Three-Tower model works the same way—your past behavior influences the search results, ensuring they align with your preferences.

Real-World Use Cases for the Three-Tower Model

Food Delivery Apps: Personalizing restaurant suggestions based on previous orders.

Streaming Platforms: Recommending content similar to what you’ve watched before.

E-learning Platforms: Suggesting courses based on a student’s learning history.

Challenges, Their Causes, and Practical Solutions

Cold Start Problem

Problem: New users or products lack enough data to generate meaningful recommendations.

Reason: Machine learning models rely heavily on historical data.

Solution: Use content-based features (like product descriptions) or transfer learning to predict preferences from similar users.

Balancing Diversity vs. Relevance

Problem: Showing only the most relevant items reduces variety.

Reason: Popular items dominate rankings, crowding out niche options.

Solution: Introduce diversity constraints or use exploration strategies (e.g., multi-armed bandits) to surface less popular items.

Real-Time Updates

Problem: Inventory or content changes may not reflect immediately in search results.

Reason: Pre-computed embeddings can become outdated.

Solution: Use dynamic embeddings or incremental updates to keep results fresh.

Bias in Recommendations

Problem: Popular items receive disproportionate visibility, creating a feedback loop.

Reason: Algorithms may overfit to engagement metrics like clicks and views.

Solution: Apply fairness-aware ranking techniques so that less popular but relevant content still gets surfaced.
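As a small illustration of the idea, the sketch below re-ranks candidates with a popularity penalty: each item's relevance is reduced by a term that grows with how much exposure it has already had. This is one simple debiasing technique, named plainly here, not a complete fairness-aware ranker; the `penalty` value and the click counts are hypothetical.

```python
import math


def debiased_rank(candidates: list[tuple[str, float, int]], penalty: float = 0.05) -> list[str]:
    """Re-rank (name, relevance, popularity) tuples, damping heavily exposed items.

    log1p grows slowly, so a very popular item is pushed down but not buried
    unless its relevance advantage is small.
    """
    def adjusted(candidate: tuple[str, float, int]) -> float:
        _, relevance, popularity = candidate
        return relevance - penalty * math.log1p(popularity)

    return [name for name, _, _ in sorted(candidates, key=adjusted, reverse=True)]


candidates = [
    ("bestselling chips", 0.80, 10_000),  # popularity = hypothetical past clicks
    ("local kale chips", 0.72, 50),
    ("new rice crackers", 0.70, 5),
]

print(debiased_rank(candidates, penalty=0.0))  # pure relevance
print(debiased_rank(candidates))               # with popularity penalty
```

With no penalty the bestseller stays on top; with `penalty=0.05` the rarely shown items move ahead, which breaks the "popular gets more popular" loop at the cost of some raw relevance. Tuning that trade-off is the hard part in practice.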

Conclusion: Bringing It All Together

Ranking algorithms play a crucial role in search engines, recommendation systems, and e-commerce platforms. The Two-Tower model provides efficient query-to-item matching, while the Three-Tower model adds personalization by leveraging user behavior.

By mastering these architectures and addressing challenges like cold starts and bias, you can build systems that deliver fast, personalized, and relevant experiences. With tools like PyTorch, you can start building your own ranking models and experiment with real-world applications today.