Models Just Want to Learn - How Generative AI Evolves

Exploring How Compute, Data, Algorithms, and Responsibility Drive Generative AI's Continuous Improvement

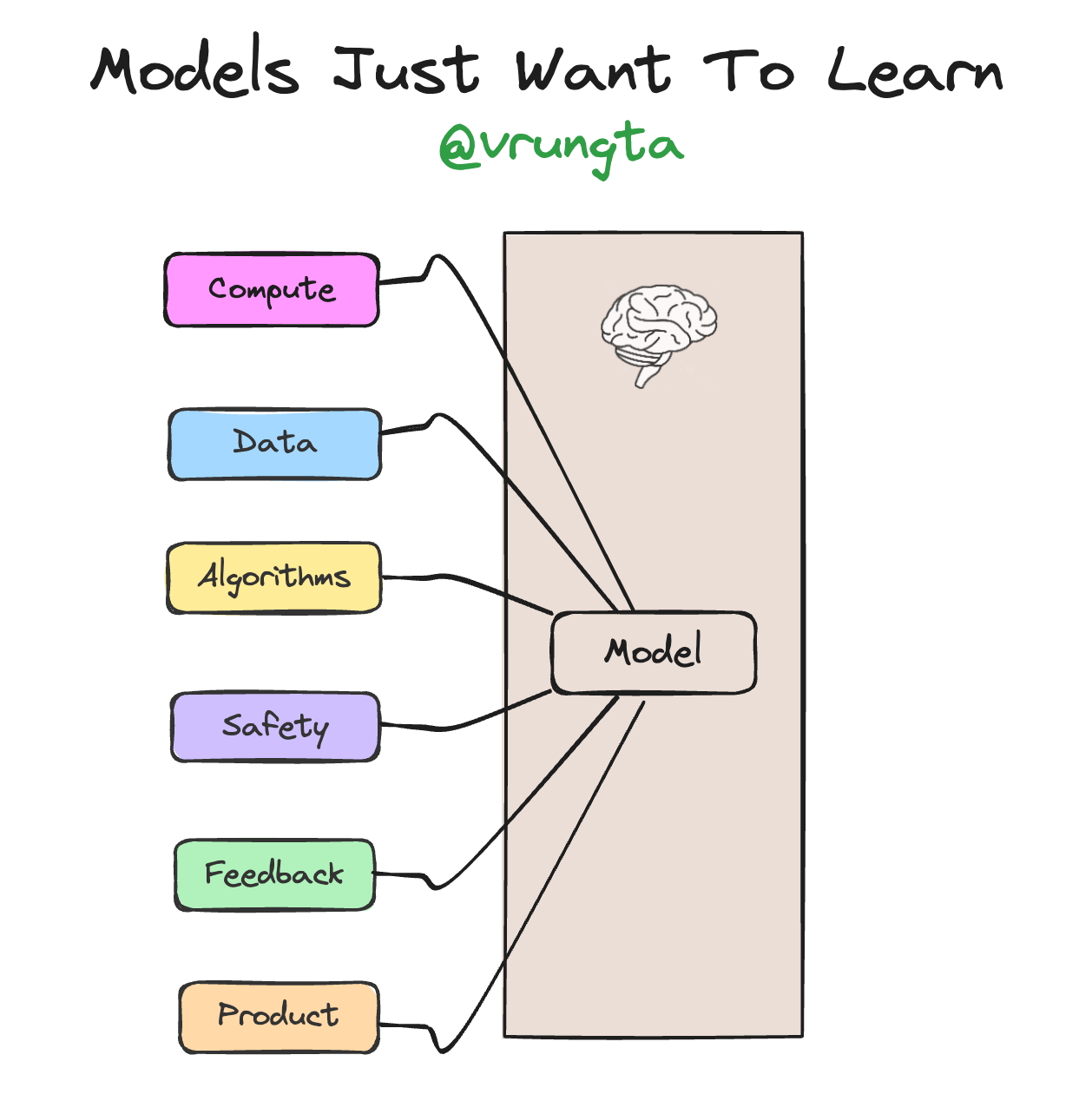

In the dynamic field of artificial intelligence, Ilya Sutskever, co-founder of OpenAI, made a profound statement: "models just want to learn." This simple yet powerful idea captures the essence of foundation models and their apparent drive to improve. To understand how these models learn, we need to look at the pivotal roles of compute power, data, and algorithms. The goal of building responsible and safe models adds another layer to this pursuit. This article explores these elements, highlighting how they collectively propel the evolution of AI models and what this means for building the future.

"Models just want to learn." - Ilya Sutskever, co-founder of OpenAI

The Nature of Learning in AI Models

When we say that models "want to learn," we are anthropomorphizing a fundamental aspect of machine learning: optimization. Foundation models such as GPT-3, BERT, Llama 3, GPT-4o, and others are trained to minimize a loss function, which measures how far their predictions are from the training targets. For GPT-style models, this is typically the cross-entropy loss of predicting the next token. This process of optimization is akin to a relentless quest for improvement, driven by vast amounts of data and sophisticated algorithms. Models need more compute, more and higher-quality data, and better algorithms to learn. Additionally, models want to learn to be safe and responsible, incorporating mechanisms to avoid harmful behavior and bias.
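
To make "optimization" concrete, here is a minimal sketch of gradient descent, the basic procedure behind this kind of training. It assumes a toy setting far simpler than a foundation model: a two-parameter linear model `y = w*x + b` fit to five made-up points by minimizing mean squared error. Language models apply the same idea with billions of parameters and a cross-entropy loss; the function names and data here are illustrative only.

```python
def mse_loss(w, b, xs, ys):
    """Mean squared error of the linear model y = w*x + b."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def gradient_descent(xs, ys, lr=0.05, steps=2000):
    """Fit w and b by repeatedly stepping against the gradient of the MSE loss."""
    w, b = 0.0, 0.0
    n = len(xs)
    history = []
    for _ in range(steps):
        # Prediction errors for the current parameters.
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        # Partial derivatives of the MSE with respect to w and b.
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        # Move each parameter a small step downhill.
        w -= lr * grad_w
        b -= lr * grad_b
        history.append(mse_loss(w, b, xs, ys))
    return w, b, history


# Hypothetical toy data generated from y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]
w, b, history = gradient_descent(xs, ys)
print(f"w={w:.3f}, b={b:.3f}, final loss={history[-1]:.2e}")
```

The loss shrinks step by step as the parameters approach the values that generated the data, which is the sense in which a model "wants" to learn.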

Models need more compute to learn

"The computational resources required for training state-of-the-art models have been doubling every few months," notes Sam Altman, OpenAI CEO

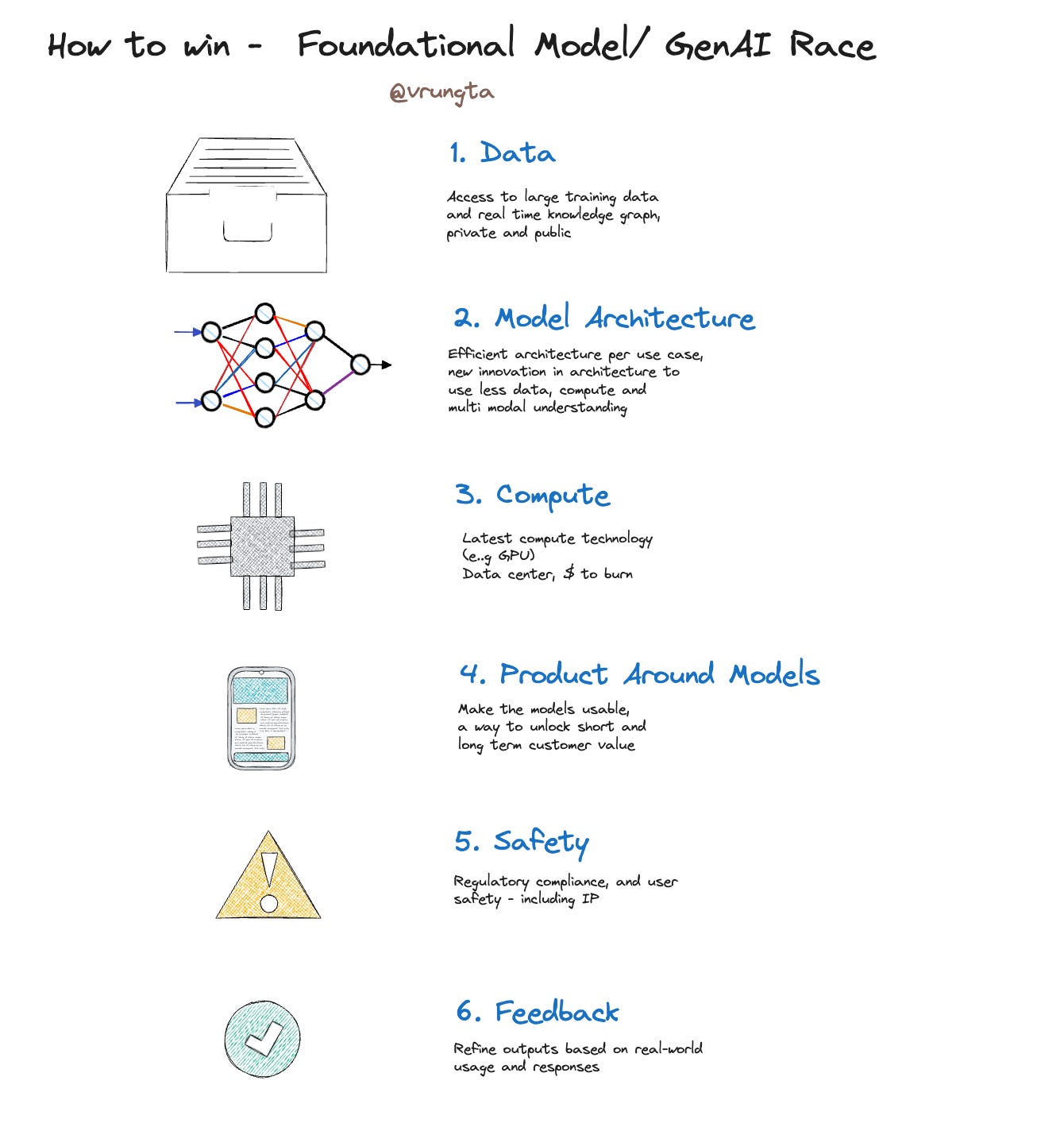

Compute power is the backbone of modern AI. How much a model can learn and improve is closely tied to the computational resources available for training. Models need more compute to learn, and advances in hardware, from traditional CPUs to GPUs and TPUs, have exponentially increased the speed and efficiency of training AI models. As models grow larger and more complex, the demand for compute escalates. OpenAI's GPT-3, for example, required thousands of petaflop/s-days of compute to train. More compute not only accelerates training but also makes it possible to explore more sophisticated models.
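
A common back-of-the-envelope rule from the scaling-law literature estimates training compute as roughly C ≈ 6·N·D floating-point operations, where N is the parameter count and D is the number of training tokens. The sketch below applies it to GPT-3's published figures (175 billion parameters, about 300 billion training tokens) and converts the result to petaflop/s-days. The 6·N·D rule is an approximation that ignores attention overhead and hardware utilization, and the helper names are hypothetical.

```python
SECONDS_PER_DAY = 86_400
PETAFLOP_PER_SECOND = 1e15  # 10^15 floating-point operations per second


def training_flops(n_params: float, n_tokens: float) -> float:
    """Approximate training compute with the C ~ 6*N*D rule of thumb."""
    return 6.0 * n_params * n_tokens


def to_petaflop_s_days(flops: float) -> float:
    """Convert a FLOP count to petaflop/s-days (1 PF/s sustained for one day)."""
    return flops / (PETAFLOP_PER_SECOND * SECONDS_PER_DAY)


# GPT-3 175B: about 175e9 parameters trained on about 300e9 tokens.
gpt3_flops = training_flops(175e9, 300e9)
gpt3_pf_days = to_petaflop_s_days(gpt3_flops)
print(f"{gpt3_flops:.2e} FLOPs ~= {gpt3_pf_days:,.0f} petaflop/s-days")
```

The estimate comes out to roughly 3.15 × 10^23 FLOPs, or about 3,650 petaflop/s-days, consistent with the several thousand petaflop/s-days reported for GPT-3.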

Models need more and higher-quality data to learn

"We need better data, not just more data," Andrew Ng

Data is the lifeblood of AI models. The more data a model has access to, the better it can learn. However, it’s not just the quantity but the quality and diversity of data that matter. Models need more and higher-quality data to learn. Diverse datasets allow models to generalize better and perform well on a variety of tasks. "We need better data, not just more data," emphasizes Andrew Ng, an AI pioneer. Ensuring access to high-quality, varied data is essential for the continued improvement of AI models.
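
What "better data" means varies by project, but two common steps are removing duplicates and filtering out low-quality records. The sketch below shows both on a handful of text snippets. The normalization rule, the minimum word count, and the alphabetic-character threshold are hypothetical choices for illustration, not settings from any particular data pipeline.

```python
import hashlib


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial variants hash the same."""
    return " ".join(text.lower().split())


def passes_quality_checks(text: str, min_words: int = 5, min_alpha_ratio: float = 0.6) -> bool:
    """Hypothetical heuristics: reject very short texts and mostly non-alphabetic ones."""
    if len(text.split()) < min_words:
        return False
    non_space = [c for c in text if not c.isspace()]
    alpha_ratio = sum(c.isalpha() for c in non_space) / max(len(non_space), 1)
    return alpha_ratio >= min_alpha_ratio


def clean_corpus(docs):
    """Drop exact duplicates (after normalization) and low-quality documents."""
    seen = set()
    kept = []
    for doc in docs:
        digest = hashlib.sha256(normalize(doc).encode("utf-8")).hexdigest()
        if digest in seen:
            continue  # duplicate of a document already seen
        seen.add(digest)
        if passes_quality_checks(doc):
            kept.append(doc)
    return kept


docs = [
    "The mitochondria is the powerhouse of the cell.",
    "the  mitochondria is the powerhouse of the CELL.",  # near-verbatim duplicate
    "click here!!!",  # too short
    "$$$ 1234 5678 #### @@@@ %%%% ^^^^",  # mostly symbols
    "Gradient descent updates parameters in the direction that reduces the loss.",
]
kept = clean_corpus(docs)
print(len(kept))
```

Real pipelines typically go further, for example with near-duplicate detection and learned quality classifiers, but the principle is the same: spend training compute on data worth learning from.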

Models want better algorithms to learn

"Algorithmic improvements have consistently driven the biggest leaps in AI capability," Geoffrey Hinton

Algorithms are the engines that drive AI learning. Innovations in algorithm design have led to significant breakthroughs in model performance. Models want better algorithms to learn. Techniques such as transfer learning, reinforcement learning, and unsupervised learning have expanded the horizons of what AI can achieve. "Algorithmic improvements have consistently driven the biggest leaps in AI capability," says Geoffrey Hinton, an AI researcher. Ongoing research in algorithms continues to push the boundaries, making AI models more capable and efficient.
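
Algorithmic gains are visible even at toy scale: with the same number of steps (roughly the same compute), a better optimizer can reach a lower loss. The sketch below compares plain gradient descent with gradient descent plus momentum on an ill-conditioned quadratic. The function, learning rate, and momentum coefficient are illustrative assumptions, and momentum stands in here for algorithmic improvements in general rather than for any of the specific techniques named above.

```python
def loss(x, y):
    """Ill-conditioned quadratic bowl: much steeper along y than along x."""
    return 0.5 * (x ** 2 + 100.0 * y ** 2)


def grad(x, y):
    """Gradient of the quadratic above."""
    return x, 100.0 * y


def plain_gd(steps=100, lr=0.01):
    """Plain gradient descent from the starting point (1, 1)."""
    x, y = 1.0, 1.0
    for _ in range(steps):
        gx, gy = grad(x, y)
        x, y = x - lr * gx, y - lr * gy
    return loss(x, y)


def momentum_gd(steps=100, lr=0.01, beta=0.9):
    """Heavy-ball momentum: a velocity term accumulates past gradients."""
    x, y = 1.0, 1.0
    vx, vy = 0.0, 0.0
    for _ in range(steps):
        gx, gy = grad(x, y)
        vx, vy = beta * vx - lr * gx, beta * vy - lr * gy
        x, y = x + vx, y + vy
    return loss(x, y)


gd_loss = plain_gd()
momentum_loss = momentum_gd()
print(f"plain GD loss: {gd_loss:.4f}, momentum loss: {momentum_loss:.6f}")
```

The steep y direction caps the usable learning rate (it must stay below 2/100 for plain gradient descent to be stable), so plain gradient descent creeps along the shallow x direction. Momentum builds up speed there and ends at a far lower loss after the same 100 steps.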

Models want to learn to be safe and responsible

"AI's future will be defined by our ability to balance power with ethical responsibility," Fei-Fei Li

As AI models become more powerful, it is crucial that they learn to be safe and responsible. This involves integrating ethical considerations into their design and training processes. Models want to learn to be safe and responsible by avoiding biases, ensuring fairness, and making ethical decisions. "AI's future will be defined by our ability to balance power with ethical responsibility," states Fei-Fei Li, an AI expert. Developing responsible AI involves rigorous testing, continuous monitoring, and incorporating feedback mechanisms to mitigate harmful outcomes.
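
Testing for bias usually starts with measuring it. One simple metric is the demographic parity difference: the gap in positive-prediction rates between groups. The sketch below computes it for hypothetical predictions and group labels. Which metric is appropriate, and what gap counts as acceptable, depends on the application; this is only one of many fairness measures.

```python
from collections import defaultdict


def positive_rates(predictions, groups):
    """Fraction of positive (1) predictions within each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions, groups):
    """Largest gap in positive-prediction rate between any two groups."""
    rates = positive_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical model outputs (1 = approved) for applicants in groups "A" and "B".
preds = [1, 1, 0, 1, 0, 1, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
print(positive_rates(preds, groups))  # {'A': 0.75, 'B': 0.25}
print(demographic_parity_difference(preds, groups))  # 0.5
```

Tracking a metric like this across model versions is one way to turn "continuous monitoring" into a concrete check.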

Models want to learn from feedback

Demis Hassabis, Co-founder and CEO of DeepMind: "User feedback is invaluable in refining AI systems, helping them to learn and adapt to real-world needs."

Another crucial aspect of AI learning is the ability to learn from user feedback. This interactive process helps models refine their outputs based on real-world usage and responses. Models want to learn from user feedback to improve their relevance and accuracy continuously. User feedback loops are integral to adaptive learning, allowing models to correct errors and enhance their performance over time.
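
One widely used way to turn user feedback into a learning signal is to collect pairwise preferences ("response A was better than response B") and fit scores with a Bradley-Terry model. This is the same pairwise loss, -log σ(r_winner - r_loser), used to train reward models in RLHF. The sketch below fits one scalar score per candidate response from a hypothetical feedback log. A real reward model would compute scores from the prompt and response text rather than store one number per response, and the function names here are illustrative.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def fit_preference_scores(n_items, preferences, lr=0.1, epochs=200):
    """Fit Bradley-Terry scores by gradient descent on -log sigmoid(s_winner - s_loser)."""
    scores = [0.0] * n_items
    for _ in range(epochs):
        grads = [0.0] * n_items
        for winner, loser in preferences:
            p_win = sigmoid(scores[winner] - scores[loser])
            # Gradient of -log(p_win): pushes the winner up and the loser down,
            # more strongly when the current scores find the outcome surprising.
            grads[winner] -= 1.0 - p_win
            grads[loser] += 1.0 - p_win
        scores = [s - lr * g for s, g in zip(scores, grads)]
    return scores


# Hypothetical feedback log: (preferred_response, rejected_response) index pairs.
feedback = [(0, 1), (0, 2), (1, 2), (0, 1), (2, 1)]
scores = fit_preference_scores(3, feedback)
ranking = sorted(range(3), key=lambda i: scores[i], reverse=True)
print(ranking)
```

Response 0 wins every comparison it appears in and ends up ranked first; responses 1 and 2 split their head-to-head matchups, but response 1 loses to response 0 more often, so it ranks last.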

Models want better products to learn effectively

Jeff Dean, Google AI Chief: "AI has the potential to make many products and services better by integrating and understanding user feedback at scale, leading to continuous improvements."

To further enhance learning, models need to be built into practical products where people actually use them. Models want better products to learn effectively. Products designed around AI models should make the models usable and valuable for end users, and should capture interactions in a form the system can use. Conversation history and other context let a model give more relevant responses within a session, while logged interactions and feedback can inform later rounds of training. Together, these help models continuously improve their performance and provide better, more relevant results.
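
In practice, a deployed model usually does not update its weights during a conversation; instead, the product passes recent conversation history back in as context on each turn. The sketch below keeps the most recent messages that fit within a context budget. Counting words instead of tokens, the `build_context` helper, and the `(role, text)` message format are simplifying assumptions for illustration, not any particular product's API.

```python
def count_tokens(text: str) -> int:
    """Crude stand-in for a real tokenizer: one token per whitespace-separated word."""
    return len(text.split())


def build_context(history, new_message, budget=50, system_prompt="You are a helpful assistant."):
    """Return the system prompt, the newest history that fits the budget, and the new message.

    `history` is a list of (role, text) tuples, oldest first. All names here are hypothetical.
    """
    used = count_tokens(system_prompt) + count_tokens(new_message)
    kept = []
    # Walk backwards so the most recent turns are kept first.
    for role, text in reversed(history):
        cost = count_tokens(text)
        if used + cost > budget:
            break
        kept.append((role, text))
        used += cost
    kept.reverse()  # restore chronological order
    return [("system", system_prompt)] + kept + [("user", new_message)]


history = [
    ("user", "My name is Ada and I work on compilers."),
    ("assistant", "Nice to meet you, Ada. How can I help with your compiler?"),
    ("user", "Explain register allocation briefly."),
    ("assistant", "Register allocation maps program variables onto a limited set of CPU registers."),
]
new_message = "Can you remind me what I work on?"
context = build_context(history, new_message, budget=30)
for role, text in context:
    print(f"{role}: {text}")
```

With a budget of 30 words, the earliest turn, where the user gave their name and job, falls out of the window. This trade-off is one reason a product might summarize or store older context instead of simply truncating it.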

Conclusion

"Models just want to learn" succinctly captures the relentless drive of AI models towards better performance. By understanding the critical roles of compute, data, algorithms, and the importance of responsible learning, we gain insight into how these models evolve and improve. As we navigate this journey, it’s essential to consider the challenges and opportunities that lie ahead.

Building for the Future

Satya Nadella, CEO of Microsoft: "Our industry does not respect tradition—it only respects innovation. You need to obsess over customer feedback, engage deeply with your customers, and constantly iterate on your products based on their input." (Harvard Business Review).

Harnessing this knowledge can empower us to build a future where AI models are more effective and beneficial. Here are some ways to apply these insights:

Invest in Computational Resources: Support the development of, and access to, advanced computing infrastructure to enable the training of more sophisticated AI models.

Champion Data Quality and Diversity: Advocate for the collection and use of high-quality, diverse datasets to enhance model generalization and fairness.

Foster Algorithmic Innovation: Encourage research and development in new algorithms that improve efficiency and effectiveness and better address ethical considerations.

Prioritize Ethical AI Practices: Engage in discussions and initiatives that promote responsible AI development, addressing issues such as privacy, bias, and environmental impact.

Incorporate User Feedback: Develop mechanisms for continuous feedback integration to improve model performance and relevance.

Develop Products Around AI Models: Create applications that utilize conversation history and context to enhance model learning and user experience.

By focusing on these areas, we can contribute to a future where AI models not only learn but also learn responsibly and effectively, driving progress across various fields and industries.