Test-Time Compute: Rethinking AI Scaling

How Dynamic Inference is Changing the Game for AI Efficiency and Capability

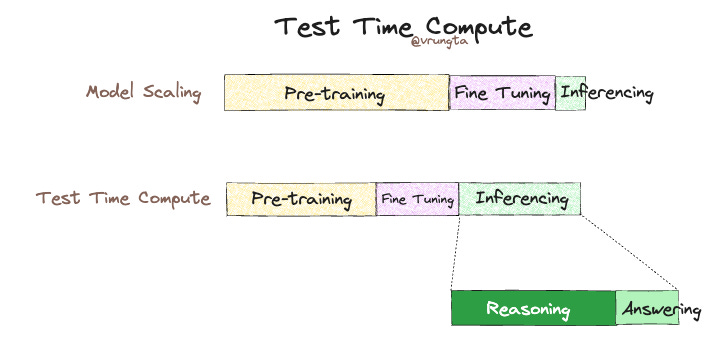

For years, the roadmap for AI advancement has focused on scaling models—making them bigger, with more data and more computing power. But this approach has started to hit a wall. As we push the limits of data resources, energy, and compute infrastructure, the industry is realizing that "bigger is better" has a serious downside. Models now require vast amounts of resources to produce relatively modest gains, leading AI researchers and companies like OpenAI to rethink their approach.

Enter test-time compute, an approach that shifts the focus from endlessly scaling up to deploying compute more intelligently at inference time, the phase where AI models generate responses or make predictions. OpenAI's "o1" model, which operates on this principle, offers a glimpse of what this smarter, resource-efficient future could look like.

The Problem with Pure Scaling

In the last decade, model scaling—primarily adding more parameters, data, and compute resources—has driven some of the most notable breakthroughs in AI. This was evident in models like OpenAI’s GPT-4 and other large language models, which saw significant improvements with each leap in model size and computational input. However, scaling has hit several major obstacles:

High Costs: Training and operating larger models is expensive, with training runs that cost millions of dollars and demand cutting-edge infrastructure.

Data Limitations: AI models rely on vast amounts of data to improve. However, high-quality data is finite, and models are quickly exhausting the easily accessible supply.

Environmental Impact: Increasing model size also means increasing energy consumption, which raises the environmental costs of AI and makes massive models harder to justify from a sustainability perspective.

Diminishing Returns: The gains from scaling are increasingly marginal. As Ilya Sutskever, an influential AI researcher, noted, the initial era of scaling yielded transformative results, but improvements are now flattening out.

With these limitations in mind, researchers are looking to maximize the potential of existing models rather than continuously scaling up. This is where test-time compute offers a new pathway.

What is Test-Time Compute?

Unlike training-time compute, which is spent during a model's initial learning phase, test-time compute is the computational effort spent during inference, when the deployed model is answering questions, generating text, or completing tasks. A model that scales its test-time compute can adjust how much computation it spends based on the complexity of each task.

Here’s how it works:

Dynamic Problem Solving: For simpler questions, the model can deliver a response quickly with minimal computational power. But for more complex tasks—like detailed problem-solving or multi-step reasoning—it can engage additional compute resources, evaluating multiple possible answers before selecting the most accurate one.

Iterative Refinement: Instead of generating a single response in one go, the model can take time to refine its answer over multiple steps. OpenAI’s o1 model, for instance, uses this process to “think” through challenges in a way that resembles human reasoning, which is especially useful in tasks that require nuanced understanding, such as intricate coding or contextual analysis.

By adjusting compute resources based on the problem’s difficulty, test-time compute allows models to achieve high accuracy without requiring a massive parameter scale-up.
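As a rough illustration of the two ideas above, the Python sketch below picks a sampling budget from a difficulty estimate and then keeps the best of several candidate answers. Everything in it is a toy assumption: `pick_budget`, `best_of_n`, `noisy_model`, and `toy_score` are hypothetical names, the "model" is a random guesser, and the scorer cheats by knowing the true answer. It is not a description of how o1 or any production system is implemented.

```python
import random
from typing import Callable, List, Tuple


def pick_budget(difficulty: float, min_samples: int = 1, max_samples: int = 16) -> int:
    """Map a difficulty estimate in [0, 1] to a number of candidate answers."""
    difficulty = min(max(difficulty, 0.0), 1.0)  # clamp out-of-range estimates
    return min_samples + round(difficulty * (max_samples - min_samples))


def best_of_n(
    generate: Callable[[random.Random], int],
    score: Callable[[int], float],
    n: int,
    seed: int = 0,
) -> Tuple[int, List[int]]:
    """Draw n candidates and return the highest-scoring one plus all candidates."""
    rng = random.Random(seed)
    candidates = [generate(rng) for _ in range(n)]
    return max(candidates, key=score), candidates


# Toy stand-ins (assumptions for illustration only).
TARGET = 17 * 23


def noisy_model(rng: random.Random) -> int:
    """Pretend model: guesses the product with a random error of up to 5."""
    return TARGET + rng.randint(-5, 5)


def toy_score(answer: int) -> float:
    """Pretend scorer: higher is better. A real scorer would not know TARGET."""
    return -abs(answer - TARGET)


if __name__ == "__main__":
    for difficulty in (0.0, 0.5, 1.0):
        n = pick_budget(difficulty)
        best, _ = best_of_n(noisy_model, toy_score, n)
        print(f"difficulty={difficulty:.1f} samples={n} best={best}")
```

In a real system the scorer would be something like a learned verifier, a reward model, or a majority vote over the candidates, and the difficulty estimate would have to come from the model or a separate classifier. The point of the sketch is only the shape of the trade: easy questions get one sample, hard ones get many.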

The Smarter Scaling Approach in Action: OpenAI’s o1 Model

OpenAI’s o1 model, recently launched as an advanced application of test-time compute, exemplifies how this approach can unlock smarter, more efficient AI. Unlike traditional models that deliver a response after a single pass, the o1 model can analyze complex queries with multiple computational steps, choosing the best path among possible solutions.

For instance, in tasks involving math problems, logic puzzles, or strategic decision-making, the model doesn’t settle for the first answer that comes to mind. Instead, it dedicates additional processing power to review and verify its responses, boosting accuracy without the need for extensive pre-training or a larger model size.
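The "review and verify" idea can be sketched as a propose-and-check loop: keep generating answers until one passes a check or the compute budget runs out. This is a minimal sketch under stated assumptions, not o1's actual mechanism. The names `solve_with_verification`, `propose_root`, and `check_root` are hypothetical, and the toy task (find an integer whose square is 1369) was chosen because checking an answer is much cheaper than finding one.

```python
import random
from typing import Callable, Optional, Tuple


def solve_with_verification(
    propose: Callable[[random.Random], int],
    verify: Callable[[int], bool],
    max_attempts: int,
    seed: int = 0,
) -> Tuple[Optional[int], int]:
    """Propose answers until one passes the check or the budget runs out.

    Returns (answer, attempts_used); answer is None if nothing verified.
    """
    rng = random.Random(seed)
    for attempt in range(1, max_attempts + 1):
        candidate = propose(rng)
        if verify(candidate):
            return candidate, attempt
    return None, max_attempts


# Toy task (an assumption for illustration): find an integer x with x * x == 1369.
def propose_root(rng: random.Random) -> int:
    """Pretend model: guesses an integer between 30 and 40."""
    return rng.randint(30, 40)


def check_root(x: int) -> bool:
    """Cheap verifier: squaring a guess is easier than finding the root."""
    return x * x == 1369


if __name__ == "__main__":
    answer, used = solve_with_verification(propose_root, check_root, max_attempts=1000)
    print(f"answer={answer} attempts={used}")
```

The `max_attempts` parameter is the test-time compute budget here: raising it costs more inference work but makes it more likely that a verified answer is found.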

This approach has demonstrated impressive results. As OpenAI researcher Noam Brown explained, a model using extra compute at test time can perform as effectively as a much larger model trained intensively on similar tasks. This means that with the right compute strategy, we can achieve high performance without the heavy costs and infrastructure associated with large-scale training.
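A toy calculation gives some intuition for why extra samples can stand in for a stronger model. Assume, purely for illustration, that each sample is independently correct with probability p and that a perfect verifier can recognize a correct answer. Then the chance that at least one of n samples is correct is 1 - (1 - p)^n. Neither assumption holds exactly in practice, and the function names below are hypothetical.

```python
def coverage(p: float, n: int) -> float:
    """Chance that at least one of n independent samples is correct."""
    if not 0.0 <= p <= 1.0 or n < 0:
        raise ValueError("p must be in [0, 1] and n must be non-negative")
    return 1.0 - (1.0 - p) ** n


def samples_needed(p: float, target: float) -> int:
    """Smallest n such that coverage(p, n) >= target."""
    if not 0.0 < p <= 1.0 or not 0.0 <= target < 1.0:
        raise ValueError("need 0 < p <= 1 and 0 <= target < 1")
    n = 1
    while coverage(p, n) < target:
        n += 1
    return n


if __name__ == "__main__":
    for n in (1, 4, 16):
        print(f"p=0.2 n={n} coverage={coverage(0.2, n):.3f}")
    print("samples for 90% coverage at p=0.2:", samples_needed(0.2, 0.9))
```

Under these assumptions, a model that is right only 20% of the time per sample needs 11 samples to reach a 90% chance of producing at least one correct answer. Real gains are smaller because samples are correlated and verifiers make mistakes.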

Practical Implications of Test-Time Compute

The shift towards test-time compute brings practical advantages for deploying AI in real-world applications:

Cost-Efficiency: By dynamically scaling compute resources, organizations can achieve high accuracy without massive, expensive infrastructure. This could make advanced AI more accessible to companies that may not have the budget for extensive scaling.

Scalability and Accessibility: Models that rely on test-time compute can be deployed across a broader range of environments, from cloud-based systems to mobile devices. Instead of requiring constant high-power hardware, these models can adjust their requirements, making them flexible and scalable.

Sustainability: Reducing the demand for extensive training infrastructure aligns with more sustainable AI practices. Because extra compute is spent only on the queries that need it, test-time compute can help models use energy more efficiently, reducing waste and the carbon footprint of AI development.

World Models: A Complementary Approach to Smarter AI

As the AI field moves towards smarter processing strategies, another concept gaining traction is the world model. This idea, which Yann LeCun discussed in his recent lecture on AI intelligence, focuses on equipping AI systems with internal models of the world, allowing them to reason in more human-like ways.

A world model enables an AI to understand not just how to complete a task, but also why certain outcomes might be preferable, based on an internal representation of context and causality. This goes beyond test-time compute, which improves resource efficiency, by adding another layer of interpretative power.

In essence, combining test-time compute with a robust world model could create AI systems that are not only more resourceful but also more insightful. For complex, multi-step problems, such a model could deliver even more nuanced answers by reasoning through its knowledge of the world.

For those interested in a deeper dive into world models and their potential role in AI reasoning, Yann LeCun’s lecture, “How Could Machines Reach Human-Level Intelligence?”, is a great resource.

The Future of AI: Scaling Smarter, Not Bigger

The test-time compute approach represents a paradigm shift in AI. Rather than following the conventional path of adding more data and more parameters, researchers are exploring how to make AI “think harder” instead of just “think bigger.” This allows models like o1 to handle complex challenges dynamically, using compute power only where it matters most.

As AI continues to expand across industries, test-time compute offers a promising way to maximize performance while keeping costs and environmental impacts in check. By moving from a scaling-focused approach to a compute-efficient strategy, AI can become more adaptable, accessible, and effective.

In this new era of AI, it’s not just about building the biggest model. It’s about building the smartest, most resourceful model possible. And with test-time compute leading the way, that future may be closer than we think.

OpenAI recently released o3, which seems to follow a similar approach: more reasoning-time compute applied to each problem, along with a better search algorithm for reasoning.